GIF2Video: Color Dequantization and Temporal Interpolation of GIF images

Yang Wang¹, Haibin Huang², Chuan Wang², Tong He³, Jue Wang², Minh Hoai¹

¹Stony Brook University, ²Megvii (Face++) USA, ³UCLA

Accepted by CVPR 2019

arXiv https://arxiv.org/abs/1901.02840

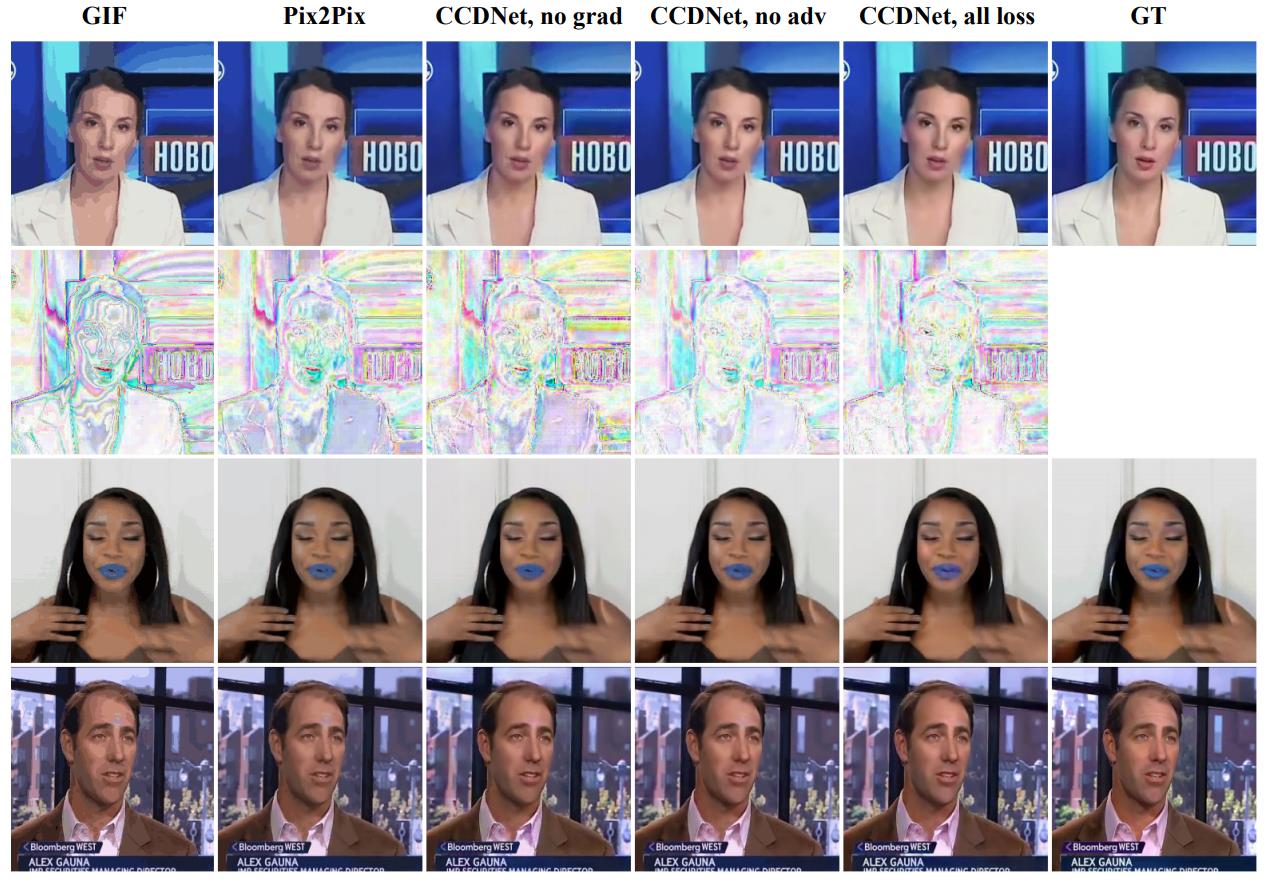

Figure: Qualitative Results of GIF Color Dequantization on GIF-Faces. Pix2Pix and CCDNet trained without image gradient-based losses cannot remove quantization artifacts such as flat regions and false contours very well. Training CCDNet with adversarial loss yields more realistic and colorful images (see the color of the skin and the lips). Best viewed on a digital device.


Abstract

Graphics Interchange Format (GIF) is a highly portable graphics format that is ubiquitous on the Internet. Despite their small sizes, GIF images often contain undesirable visual artifacts such as flat color regions, false contours, color shift, and dotted patterns. In this paper, we propose GIF2Video, the first learning-based method for enhancing the visual quality of GIFs in the wild. We focus on the challenging task of GIF restoration by recovering information lost in the three steps of GIF creation: frame sampling, color quantization, and color dithering. We first propose a novel CNN architecture for color dequantization. It is built upon a compositional architecture for multi-step color correction, with a comprehensive loss function designed to handle large quantization errors. We then adapt the SuperSlomo network for temporal interpolation of GIF frames. We introduce two large datasets, namely GIF-Faces and GIF-Moments, for both training and evaluation. Experimental results show that our method can significantly improve the visual quality of GIFs, and outperforms direct baseline and state-of-the-art approaches.
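To make the three lossy steps named in the abstract concrete, here is a minimal NumPy sketch of frame sampling, color quantization, and color dithering. It is an illustration only, not the pipeline used in the paper: the function names (`sample_frames`, `quantize`, `floyd_steinberg`), the fixed black-and-white palette, and the choice of Floyd-Steinberg error diffusion are all assumptions made for this example. Real GIF encoders typically build an adaptive palette of up to 256 colors per frame.

```python
import numpy as np

def sample_frames(frames, step):
    """Frame sampling: keep every `step`-th frame of the source video."""
    return frames[::step]

def quantize(image, palette):
    """Color quantization: map each pixel to its nearest palette color (squared RGB distance)."""
    pixels = image.reshape(-1, 1, 3).astype(np.float64)
    dists = ((pixels - palette[None].astype(np.float64)) ** 2).sum(axis=2)
    nearest = dists.argmin(axis=1)
    return palette[nearest].reshape(image.shape)

def floyd_steinberg(image, palette):
    """Color dithering: quantize while diffusing each pixel's error to unvisited neighbors."""
    h, w, _ = image.shape
    work = image.astype(np.float64)      # working copy that accumulates diffused error
    pal = palette.astype(np.float64)
    out = np.zeros_like(image)
    for y in range(h):
        for x in range(w):
            old = work[y, x].copy()
            k = ((pal - old) ** 2).sum(axis=1).argmin()
            out[y, x] = palette[k]
            err = old - pal[k]
            # Standard Floyd-Steinberg weights: 7/16 right, 3/16 down-left, 5/16 down, 1/16 down-right.
            if x + 1 < w:
                work[y, x + 1] += err * 7 / 16
            if y + 1 < h:
                if x > 0:
                    work[y + 1, x - 1] += err * 3 / 16
                work[y + 1, x] += err * 5 / 16
                if x + 1 < w:
                    work[y + 1, x + 1] += err * 1 / 16
    return out

# Hypothetical toy input: a flat mid-gray frame and a two-color palette.
palette = np.array([[0, 0, 0], [255, 255, 255]], dtype=np.uint8)
gray = np.full((8, 8, 3), 128, dtype=np.uint8)

flat = quantize(gray, palette)              # every pixel snaps to the same palette color
dithered = floyd_steinberg(gray, palette)   # a mix of black and white pixels
kept = sample_frames(list(range(10)), 3)    # indices of the frames that survive sampling
```

On this toy input, plain quantization collapses the gray frame to a single color (the flat-region artifact), while dithering trades that for a pattern of black and white pixels (the dotted-pattern artifact). Frame sampling simply discards the intermediate frames. These are the kinds of losses a restoration method has to undo.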


Bibtex

@inproceedings{wang2019gif2video,
  title={GIF2Video: Color Dequantization and Temporal Interpolation of GIF images},
  author={Wang, Yang and Huang, Haibin and Wang, Chuan and He, Tong and Wang, Jue and Hoai, Minh},
  booktitle={arXiv preprint 1901.02840},
  year={2019}
}